Living Sculpture is an interactive experience developed as a class assignment under the theme “Living Archive,” exploring how we can create and interact with new datasets using machine learning.

Inspired by sculptures in The Met's Open Access collection, I started from an observation: museum visitors often mimic a sculpture's pose when taking photos. This project builds on that interaction, with a twist, by focusing specifically on Buddhist sculptures. Growing up, I often found temple visits and the sculptures in them dull, so I wanted to reframe that experience as something playful, engaging, and accessible.

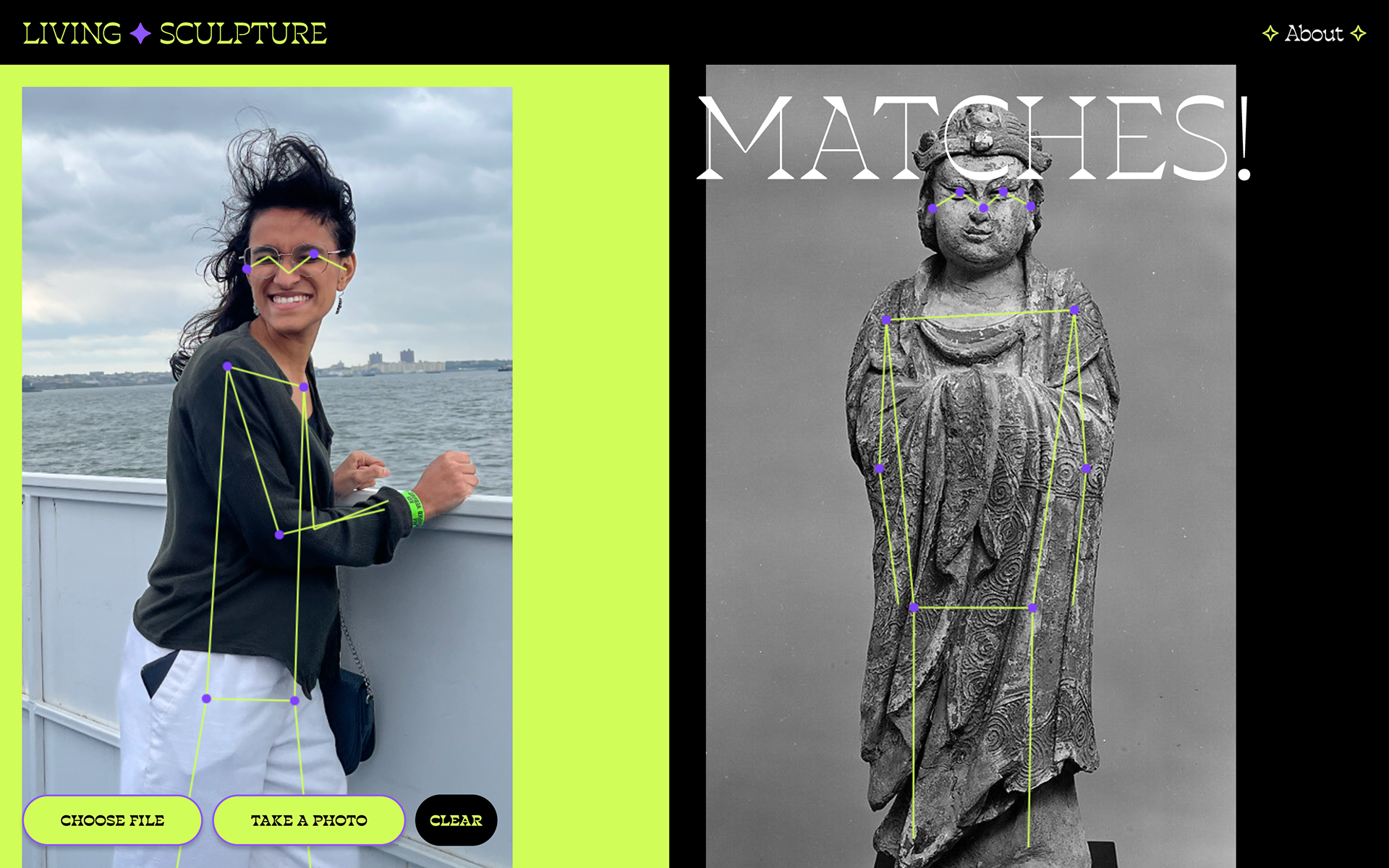

In this project, users upload or capture a photo of themselves, and the system estimates their body pose using ml5.js. It then finds the closest pose among nearly 200 Buddhist sculpture images from the archive. To make this possible, I used ml5.js's pose estimation to build a dataset of sculpture poses, and I compare poses by calculating the distance between keypoints (with help from GPT Copilot for the math). When a user submits an image, their pose is compared against the dataset, and the sculpture with the smallest distance is the match.
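
As a rough illustration of the matching step, here is a minimal sketch rather than the project's actual code. It assumes each pose is an array of `{x, y}` keypoints listed in the same order and with the same length for every image. The function names, the toy dataset, and the bounding-box normalization are all assumptions made for this example.

```javascript
// Hypothetical helpers: names and the normalization step are illustrative assumptions.

// Rescale keypoints so the pose's bounding box fits inside a unit square.
// This makes the comparison independent of image size and position.
function normalizePose(keypoints) {
  const xs = keypoints.map((k) => k.x);
  const ys = keypoints.map((k) => k.y);
  const minX = Math.min(...xs);
  const minY = Math.min(...ys);
  // Fall back to 1 if every point is identical (zero-size box).
  const scale = Math.max(Math.max(...xs) - minX, Math.max(...ys) - minY) || 1;
  return keypoints.map((k) => ({ x: (k.x - minX) / scale, y: (k.y - minY) / scale }));
}

// Mean Euclidean distance between corresponding keypoints of two poses.
// Assumes both arrays have the same length and keypoint order.
function poseDistance(a, b) {
  const na = normalizePose(a);
  const nb = normalizePose(b);
  let total = 0;
  for (let i = 0; i < na.length; i++) {
    total += Math.hypot(na[i].x - nb[i].x, na[i].y - nb[i].y);
  }
  return total / na.length;
}

// Return the dataset entry whose pose is nearest to the user's pose.
function findClosestSculpture(userKeypoints, sculptures) {
  let best = null;
  let bestDistance = Infinity;
  for (const sculpture of sculptures) {
    const d = poseDistance(userKeypoints, sculpture.keypoints);
    if (d < bestDistance) {
      bestDistance = d;
      best = sculpture;
    }
  }
  return { sculpture: best, distance: bestDistance };
}

// Toy data with three keypoints per pose (real poses have many more).
const sculptures = [
  { id: "seated-a", keypoints: [{ x: 0, y: 0 }, { x: 1, y: 0 }, { x: 0, y: 2 }] },
  { id: "standing-b", keypoints: [{ x: 0, y: 0 }, { x: 2, y: 0 }, { x: 0, y: 1 }] },
];
// Same shape as "seated-a", only shifted and enlarged.
const userPose = [{ x: 10, y: 10 }, { x: 20, y: 10 }, { x: 10, y: 30 }];

const match = findClosestSculpture(userPose, sculptures);
console.log(match.sculpture.id); // logs "seated-a"
```

Normalizing before measuring distance is one possible design choice: without it, a user standing far from the camera would look "different" from a sculpture photographed up close even when the pose is the same.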

Because the archive offers a limited number of images, pose matching is still rough in this prototype. I believe the concept can be extended with a larger dataset to support richer interaction. For me, this project is a playful exploration of machine learning libraries, user interaction, and cultural reinterpretation.